In our research project, we will develop a robotic platform for spinal surgery that uses algorithms developed with deep learning (AI). These algorithms will transform high-resolution preoperative 3D images, such as CT and MRI scans, into high-resolution images of the patient in his or her new lying position during surgery. The novel part of the proposed procedure is a significant reduction in the use of carcinogenic ionizing X-ray imaging, such as CT scans, during surgery, while still enabling sub-millimeter surgery and catheter tracking.

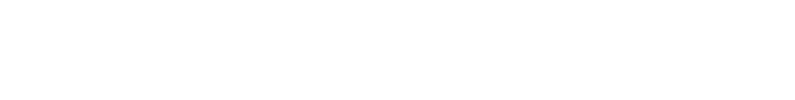

3-step surgical procedure

Defining the concept

The patient’s lying position differs before, at the start of and during surgery, which affects the position and shape of the spinal cord. All of these changes must be taken into account to perform sub-millimeter surgery.

- Before: the patient is lying on his/her back for high-resolution CT/MRI scans.

- At the start: the patient is lying on his/her stomach.

- During: the patient is lying on his/her stomach and moves slightly because of breathing, heartbeat and the impact of the surgery itself.

Overview of the patient’s physical position before, at the start and during surgery © VERHAERT

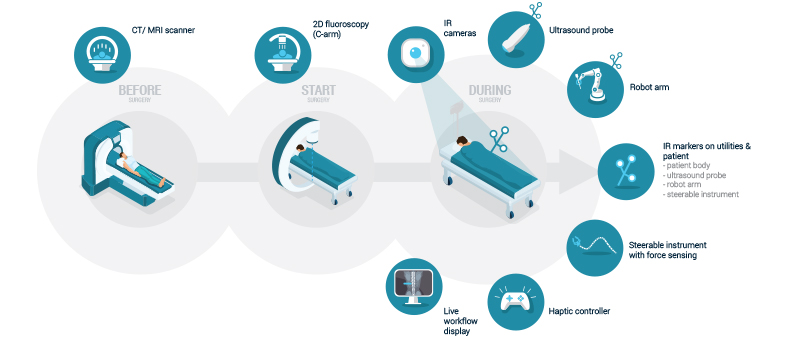

Before surgery

A high-resolution, sub-millimeter 3D image of the patient is taken several days before surgery, using either a CT scan or an MRI scan. Typically, the scan is taken while the patient is lying on his/her back. Based on this image, the surgery is planned and a trajectory is calculated to reach the desired location in the spinal cord.

Before surgery, the patient is lying on his/her back for high-resolution CT/MRI scans © VERHAERT

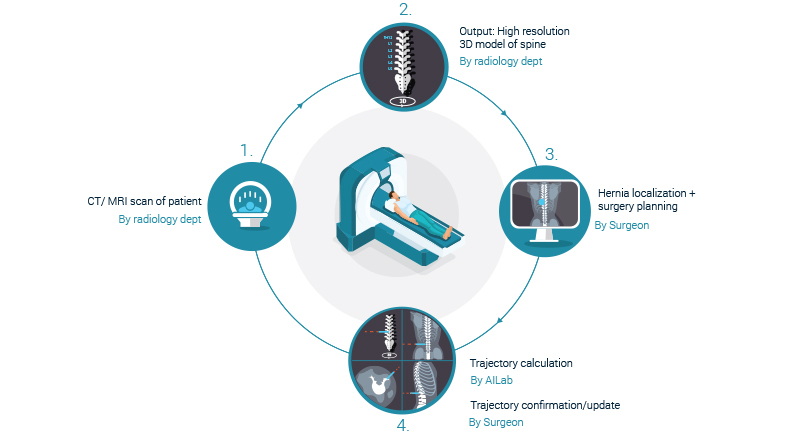

At the start of surgery

At this stage, the patient is lying on his/her stomach. Markers are placed on the patient and detected by a set of infrared cameras to create a 3D model of the patient’s physical position on the operating table. In this new position, a low-dose, low-resolution (supra-millimeter) 2D image of the patient’s spine is taken with a C-arm 2D fluoroscopic scanner.
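To make the marker localization step more concrete, here is a minimal Python sketch of how a single marker's 3D position could be estimated from two cameras. It is an illustration only, not the project's method: it assumes each camera has already been calibrated so that a detected marker yields a viewing ray (a camera origin plus a direction) in a shared coordinate frame. The function name `triangulate_midpoint` and the example camera positions are hypothetical.

```python
# Midpoint triangulation: estimate a marker position as the midpoint of the
# shortest segment between two camera viewing rays.
# Assumption: rays are given in one shared frame (calibration is already done).

def _add(a, b):
    return tuple(x + y for x, y in zip(a, b))

def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def _scale(a, s):
    return tuple(x * s for x in a)

def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def triangulate_midpoint(origin1, dir1, origin2, dir2):
    """Return the point halfway between the closest points of two rays."""
    w = _sub(origin1, origin2)
    a = _dot(dir1, dir1)
    b = _dot(dir1, dir2)
    c = _dot(dir2, dir2)
    d = _dot(dir1, w)
    e = _dot(dir2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; the marker cannot be triangulated")
    t1 = (b * e - c * d) / denom  # parameter along ray 1
    t2 = (a * e - b * d) / denom  # parameter along ray 2
    p1 = _add(origin1, _scale(dir1, t1))
    p2 = _add(origin2, _scale(dir2, t2))
    return _scale(_add(p1, p2), 0.5)

# Hypothetical setup: two cameras 1 m apart, both seeing the same marker.
marker = triangulate_midpoint(
    (0.0, 0.0, 0.0), (0.5, 0.2, 2.0),
    (1.0, 0.0, 0.0), (-0.5, 0.2, 2.0),
)
print(marker)
```

With noisy detections the two rays do not intersect exactly, which is why the midpoint of their closest approach is used. Repeating this for every marker would give the set of 3D points that describes the patient’s position on the table.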

At the start of the surgery, the patient is lying on his/her stomach © VERHAERT

The 2D image and the external marker locations are used to transform the high-resolution preoperative 3D image into a newly reconstructed high-resolution 3D image of the spine in its new position and shape. At this point, the surgeon and his/her team have a 3D image of the patient’s anatomy combined with an external reference frame.
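As a deliberately simplified illustration of what moving preoperative data into the intraoperative frame means, the Python sketch below maps planned trajectory points with a rigid transform (a rotation plus a translation). This is not the deep learning approach described here: a rigid transform captures only the change in position, not the change in the shape of the spine. The axis convention (z running from head to foot), the 180° roll from back to stomach, the table offset and all function names are assumptions made for the example.

```python
from math import cos, sin, radians

def roll_about_long_axis(angle_deg):
    """Rotation matrix for a roll about the z axis (assumed head-to-foot axis)."""
    a = radians(angle_deg)
    return (
        (cos(a), -sin(a), 0.0),
        (sin(a), cos(a), 0.0),
        (0.0, 0.0, 1.0),
    )

def apply_rigid(rotation, translation, point):
    """Map one 3D point: rotate it, then translate it."""
    return tuple(
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    )

def map_trajectory(rotation, translation, points):
    """Map every point of a planned trajectory into the new frame."""
    return [apply_rigid(rotation, translation, p) for p in points]

# Hypothetical plan: an entry point and a target point in millimeters,
# defined in the preoperative scan frame (patient on his/her back).
planned = [(10.0, 20.0, 30.0), (12.0, 45.0, 30.0)]

# Assumed pose change: patient turned onto the stomach (180 degree roll)
# and shifted 5 mm along x on the operating table.
rotation = roll_about_long_axis(180.0)
translation = (5.0, 0.0, 0.0)

for before, after in zip(planned, map_trajectory(rotation, translation, planned)):
    print(before, "->", after)
```

A rigid transform preserves all distances between points, which is exactly why it cannot represent the spine bending or the motion from breathing. That gap is what the learned transformation, informed by the 2D fluoroscopic image and the marker positions, is meant to close.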
