From healthcare and manufacturing to space and marketing, machine learning proves to be a great tool to reduce costs, save time, and increase revenue. Managing this process, however, will prove one of the main challenges for businesses in the years to come. Once you’ve identified machine learning as your AI opportunity, there are two primary building blocks for building this model: data and – often overlooked – data labels. Labeling those datasets might be a lot trickier than you thought though. Here are some tips to navigate this challenge.

Collecting datasets

In our previous blog, we defined different steps to discover your AI opportunities. Once you’ve identified the process you want to automate and the information you hope to obtain, you’ll need data to feed the model. These are the camera images, audio signals, text messages, or sensor measurements the model will analyze to provide you with answers to your questions. Whether you are looking to predict the stock market or develop a medical application, having low-quality, biased or unreliable data makes the task impossible. Take for example a study on blood oxygenation levels that fails to consider the difference in sensor response of the pulse oximeter between patients with different skin colors. This would significantly reduce the probability of detecting occult hypoxemia in black patients compared to white patients.

Problem understanding is indispensable to producing a valuable dataset. Your team should understand the variability relevant to defining the problem in practice. Often, people tend to overly bias the dataset toward the most accessible data. A self-driving car whose algorithms are trained only on roads the developers happen to travel regularly is not robust. Not entirely unlike humans, ML algorithms might find it challenging to assess unknown situations. For Machine Learning models, this results in unpredictable model outcomes because machine learning models can’t learn outside the data. So high volumes of information gathered in various circumstances are crucial to developing a trustworthy algorithm.

Finetuning the labeling process

The importance of data as a crucial building block in a machine learning project is gaining recognition. However, apart from raw, high-quality data, a machine learning project is built upon the data labels. They’re the ground truth of your model and represent the outcome your model should output. Think of it like this, a parent won’t just point at items to show their baby, they will also say the name of the item. This way the baby will learn to recognize and name these items in its surroundings. With a machine learning algorithm, this is exactly the same.

Obtaining labels can be complex and labor-intensive. Machine vision problems often require manual labeling for specific objects in each image. Depending on the application, the human labelers must have the appropriate qualifications to label medical scans, images of technical defects or any other specific image type.

Some things to consider during the labeling process:

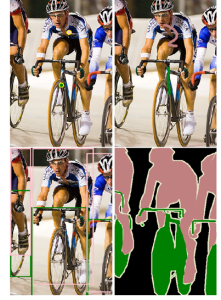

- Different labeling requirements come at different prices. Only requesting a classification label for the complete image is a tenth of the cost of delineating all instances in the picture. The figure below illustrates different labeling approaches.

- While developing the model, it pays off to evaluate the current weaknesses so you know which labels you need to improve. Knowing what the model struggles with allows you to maximize the return on new data.

- When you outsource the labeling task to specialized companies, these are critical suppliers. Your team should treat them as such. You should monitor their results adequately. Too often, the perceived simplicity of the task makes people forget to define strict and well-thought-out quality metrics on the results.

Illustration of different label types. Point annotation (top left) costs less than full mask labelling (bottom right).

Squiggles (top right) and bounding box annotation are in between these extremes. (Source)

Maximizing the return

A dataset of delineated images is necessary to build a model to delineate objects. Currently, techniques are being developed to train models based on weakly supervised data. These techniques aim to use latent information in cheaper, low-information labels to prepare models for high-information output. In the classical approach, models require the same level of information in the labels and the desired result. This is expensive, so you’ll need a human to provide you with ‘examples’ of this valuable output.

Whether you are building an algorithm to read text documents or you are building a self-driving car, the message is the same. You don’t just need data, you need a high volume of qualitative data in all relevant circumstances and you should definitely not forget to gather qualitative labels. Do this and you’ll be one step closer to the optimal solution for your next ML project. Interested in learning more? Subscribe to our AI blog mail or visit the AILab page.

This article was co-written by Jan Alexander.