To differentiate this robotics blog post from others, we will not mention that the term ‘robot’ comes from the Czech word ‘robota’, which means ‘hard work’. We will also skip mentioning the 3 laws of robotics defined by Isaac Asimov. Briefly: a robot should not harm a human being through action or inaction, it should obey the orders of a human unless that would harm a human, and it should protect its own existence as long as no harm is done to a human. From a technical viewpoint, which discipline solves the problem of robotics? Mechanical, electrical, electronic, automation, or computer engineering? The answer is yes.

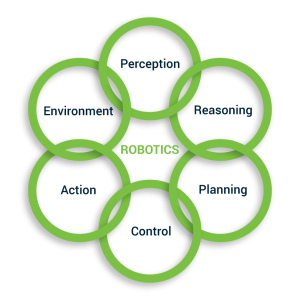

So far it may seem that I have not said anything useful, but that could not be further from the truth. The key takeaway is that robotics is one of the most interdisciplinary fields of the modern day. This blog post breaks the field of robotics down into 6 subcomponents and describes how artificial intelligence (AI) can impact each one of them.

The cycle of action

Let’s imagine a robot, either a basic factory manipulator or Mr. Schwarzenegger’s beloved sci-fi character in the famous human-machine interaction cinema series (for the very young readers, that’s the Terminator franchise). If we take a close look at our robot, we can see an advanced, powerful mechanical structure. What if we look closer and strip it naked? We can see actuators, motors, cables, and power drives. How about we use a strong lens when looking at the naked machine? We can observe circuit boards and very thin conductive connections. Let’s go to the quantum level now: here we can witness the physical expression of information bits on the very thin embedded connections and inside the microchips.

My eyes hurt from all that focus, so let’s take a few steps back. What do we see if we look at the robot from very far away? A system that exists in, and interacts with, a physical environment in a safe, efficient, useful, and predictable way, and that is not a sphere in a void (the favorite joke of our physicist friends).

Robotics model ©VERHAERT

To do this, the properties or the state of the environment must be made known to our robotic system. This step is called perception. We humans use perception all the time through our 5 senses. Next, we need to find a way to use this information and make a decision through reasoning. The reasoning usually outputs a goal: a robotic arm might see an item and reason that it needs to pick it up. To reach this goal, the robot plans a series of steps, for instance how the arm should move to pick up the item. To follow this plan, it needs a set of control inputs that translate almost directly into actions, and these actions usually affect the environment.

Imagine you are working out on a sunny day and see a bottle of water standing on the table. You reason that you are thirsty and should drink the water. First, your brain needs to plan the movement to grab the bottle and bring it towards your mouth. Second, you control your muscles in a certain way for each of these steps, performing the actions with your body that bring you to your goal. Finally, these actions act on the environment by changing its state: the bottle of water is no longer in the same location, and it contains less water than before. The entire perception-reasoning-planning-control-action cycle is never-ending, for living beings and machines alike.
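For readers who think in code, the cycle can be sketched as a loop. This is a minimal toy sketch, not a description of any real robot controller: it assumes a hypothetical one-dimensional world with a gripper on a rail and a single item, and every name in it (`Environment`, `perceive`, `reason`, `plan`, `control`, `act`, `run_cycle`) is made up for illustration. Each function stands for one stage of the cycle.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Environment:
    """Hypothetical 1-D world: a gripper on a rail and a single item."""
    gripper_pos: int = 0
    item_pos: int = 5
    item_held: bool = False


def perceive(env: Environment) -> dict:
    # Perception: make the state of the environment known to the robot.
    return {"gripper": env.gripper_pos, "item": env.item_pos, "held": env.item_held}


def reason(obs: dict) -> Optional[int]:
    # Reasoning: output a goal (the item's position), or None once the item is held.
    return None if obs["held"] else obs["item"]


def plan(obs: dict, goal: int) -> List[int]:
    # Planning: the series of unit steps (waypoints) from the gripper to the goal.
    step = 1 if goal >= obs["gripper"] else -1
    return list(range(obs["gripper"] + step, goal + step, step))


def control(obs: dict, waypoint: int) -> int:
    # Control: turn the next waypoint into a command of -1, 0 or +1.
    return (waypoint > obs["gripper"]) - (waypoint < obs["gripper"])


def act(env: Environment, command: int) -> None:
    # Action: apply the command; this changes the state of the environment.
    env.gripper_pos += command
    if env.gripper_pos == env.item_pos:
        env.item_held = True  # reaching the item counts as picking it up


def run_cycle(env: Environment, max_cycles: int = 100) -> int:
    """Repeat perception-reasoning-planning-control-action; return cycles used."""
    for cycle in range(max_cycles):
        obs = perceive(env)
        goal = reason(obs)
        if goal is None:
            return cycle  # goal reached, nothing left to do
        waypoints = plan(obs, goal)  # re-planned every cycle from fresh perception
        command = control(obs, waypoints[0]) if waypoints else 0
        act(env, command)
    return max_cycles


if __name__ == "__main__":
    env = Environment()
    print(run_cycle(env), env)
```

Re-planning on every pass from fresh perception is a deliberate choice in this sketch: if the item moved between cycles, the next plan would simply follow it, which is the "never-ending" part of the cycle.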

Demystifying artificial intelligence

How does AI come into play? First of all, what is it? AI is the new buzzword for ‘algorithm’, and it is what engineers say when they do not want to say what they are doing. All jokes aside, an algorithm is a logical set of steps that solves a problem. The same holds for an AI system: it is an implementation of logic that allows the system to detect, learn, adapt, make decisions, and/or perform actions with minimal human intervention. Maybe not a fully accurate definition, but at least an intuitive one for an often misunderstood term.

AI algorithms can be classified as either heuristic or learning methods. Heuristic methods usually offer a logic-based solution (or implementation) and are fit for rather simple problems. They don’t require large amounts of data to create value. Learning methods, on the other hand, adapt much better to complex problems, at the cost of a sometimes extreme hunger for data. Deep learning is one of the learning methods that exploded in the past decade as hardware capabilities grew exponentially. So, by augmenting the mechanical structure of a robot with AI, we can obtain autonomous machines. Easy.
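The difference between the two families can be shown on a deliberately tiny problem. The sketch below assumes a hypothetical decision, "is this object close enough to grasp?", and contrasts a hand-written rule (heuristic) with a threshold fitted to labelled examples (learning, here a one-feature decision stump, far simpler than deep learning). The function names, the 30 cm reach, and the data are all made up for illustration.

```python
from typing import List, Tuple


def heuristic_is_graspable(distance_cm: float) -> bool:
    # Heuristic method: an engineer writes the rule by hand, no data needed.
    # The 30 cm reach is a hypothetical value, not a real robot's specification.
    return distance_cm <= 30.0


def learn_threshold(samples: List[Tuple[float, bool]]) -> float:
    """Learning method: choose the threshold that misclassifies the fewest samples.

    `samples` holds (distance in cm, grasp succeeded?) pairs. This one-feature
    decision stump is about the simplest possible learner.
    """
    best_t, best_errors = 0.0, len(samples) + 1
    for t in sorted(d for d, _ in samples):
        # Count samples where the rule "graspable if distance <= t" is wrong.
        errors = sum((d <= t) != label for d, label in samples)
        if errors < best_errors:
            best_t, best_errors = t, errors
    return best_t


if __name__ == "__main__":
    # Made-up labelled observations, for illustration only.
    data = [(10.0, True), (25.0, True), (34.0, True), (40.0, False), (55.0, False)]
    t = learn_threshold(data)
    print(t)  # 34.0
    print(heuristic_is_graspable(34.0), 34.0 <= t)  # False True
```

With these made-up samples the learned rule accepts 34 cm, which the hand-written rule rejects: the data, not the engineer, set the boundary. The flip side is visible too: five samples make for a fragile threshold, which is why learning methods need so much more data.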

Getting pragmatic

The story of autonomous machines is a very nice one, but we are not completely there yet. Almost every step of the ‘cycle of action’ as we defined it earlier can be augmented with AI methods:

- Perception can translate into situational awareness, voice-to-text, natural language processing, computer vision, scene understanding, or chatbots,

- Reasoning will lead to expert systems and goal optimization,

- Planning enables autonomous navigation and distributed task scheduling,

- Action and control are generated with optimal control strategies and reinforcement learning.
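Of the items in the list above, planning for autonomous navigation is perhaps the easiest to show in a few lines. Below is a minimal sketch of grid-based path planning with A*, one classic (non-learning) planner; the text-based occupancy grid, the `astar` function, and the unit cost per move are assumptions for illustration, not a description of any particular robot's navigation stack.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]


def astar(grid: List[str], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """A* search on a 4-connected occupancy grid ('#' = obstacle, '.' = free).

    Returns the list of cells from start to goal, or None if the goal is unreachable.
    """
    rows, cols = len(grid), len(grid[0])

    def h(c: Cell) -> int:
        # Manhattan distance: never overestimates for unit-cost 4-connected moves.
        return abs(c[0] - goal[0]) + abs(c[1] - goal[1])

    open_heap: List[Tuple[int, int, Cell]] = [(h(start), 0, start)]
    came_from: Dict[Cell, Cell] = {}
    g_cost: Dict[Cell, int] = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk the parent links back to the start to rebuild the path.
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale heap entry; a cheaper route was already found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                ng = g + 1
                if ng < g_cost.get((nr, nc), float("inf")):
                    g_cost[(nr, nc)] = ng
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (ng + h((nr, nc)), ng, (nr, nc)))
    return None


if __name__ == "__main__":
    grid = [
        "....",
        ".##.",
        "....",
    ]
    path = astar(grid, (0, 0), (2, 3))
    print(len(path) - 1)  # 5 moves around the obstacle
```

A* is a heuristic method in the sense used earlier: the map and the distance estimate are supplied by hand, and no training data is involved. Learning-based navigation would instead estimate costs or actions from experience.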

The environment itself can be augmented for AI (for example with visual markers), but that is almost cheating: we are trying to develop systems that adapt to their environment, not the other way around. Still, a problem can sometimes be simplified substantially by limiting the degrees of freedom in the environment. This can make the difference between an impossible task and a simple solution. Think of traffic rules: without them, driving would be a much more complex task for any person. The problem is simplified by adding rules that everyone should follow. But we are sliding out of scope again; check the other blog posts for more!
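A rough count shows why constraining the environment helps so much. Assume, purely for illustration, that a part lying on a table can sit in any of 100 × 100 discrete positions and any of 36 orientations (10° steps), and that a fixture fixes its orientation:

$$N_{\text{free}} = 100 \times 100 \times 36 = 360\,000, \qquad N_{\text{fixture}} = 100 \times 100 \times 1 = 10\,000$$

The perception system now has 36 times fewer poses to tell apart, and every further degree of freedom removed divides the count again by its number of possible values.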

One important thing to remember is that adding intelligence to robots always sits on a spectrum. A robotic system has to be as intelligent as the complexity of its environment and task demands. Assessing this complexity can be an art in itself, but this is what AI experts are good at.

Realistic goals

At Verhaert, we’re aiming to bring robots from ‘hard workers’ to ‘hard and smart co-workers’: from jocks to scholars, from the purely physical plane to the cognitive space, and from medical applications to space applications. And of course, let’s not forget factory automation (automatic quality control, warehouse logistics, predictive maintenance). Not all AI code has to be developed for autonomous Tesla cars; boats deserve an autopilot too. And we are on it.

If you want to know more about the business and product practicality of AI algorithms, this blog is the right place to be. Feel free to check the other posts to see how AI can augment your life.